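Ceph is a distributed, scalable, highly available, high-performance storage platform that supports three storage interfaces: block device, file system, and REST. It is highly configurable and provides a command-line interface for monitoring and controlling its storage cluster. Ceph also includes authentication and authorization features and is compatible with several storage gateway interfaces, such as OpenStack Swift and Amazon S3.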

Ceph cluster

Prepare the machines:

```
ceph 10.10.51.200
mon1 10.10.51.201
mon2 10.10.51.202
mon3 10.10.51.203
osd1 10.10.51.211 (10.10.110.211)
osd2 10.10.51.212 (10.10.110.212)
osd3 10.10.51.213 (10.10.110.213)
osd4 10.10.51.214 (10.10.110.214)
```
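Since later steps address the nodes by name, every machine has to be able to resolve these names. A minimal sketch, assuming no DNS exists for them and `/etc/hosts` is used instead (the original does not say how names are resolved); `ceph_hosts_entries` is a hypothetical helper name:

```shell
# Hypothetical helper: print /etc/hosts lines for the public addresses listed above.
ceph_hosts_entries() {
    cat <<'EOF'
10.10.51.200 ceph
10.10.51.201 mon1
10.10.51.202 mon2
10.10.51.203 mon3
10.10.51.211 osd1
10.10.51.212 osd2
10.10.51.213 osd3
10.10.51.214 osd4
EOF
}

# To apply on a node (assumption: the names are not already resolvable):
# ceph_hosts_entries | sudo tee -a /etc/hosts
```

The helper only prints; appending to `/etc/hosts` is left as an explicit, commented-out step so the output can be reviewed first.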

Because the ceph-deploy tool communicates with the other nodes by hostname, set the hostnames with hostnamectl, running the matching command on each node:

```shell
hostnamectl --static set-hostname ceph
hostnamectl --static set-hostname mon1
hostnamectl --static set-hostname mon2
hostnamectl --static set-hostname mon3
hostnamectl --static set-hostname osd1
hostnamectl --static set-hostname osd2
hostnamectl --static set-hostname osd3
hostnamectl --static set-hostname osd4
```

Run the following commands on every node:

```shell
useradd -d /home/cephuser -m cephuser
passwd cephuser

echo "cephuser ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/cephuser
chmod 0440 /etc/sudoers.d/cephuser

yum install -y ntp ntpdate ntp-doc
ntpdate 0.us.pool.ntp.org
hwclock --systohc
systemctl enable ntpd.service
systemctl start ntpd.service

yum install -y open-vm-tools ## If you run the nodes as virtual machines, otherwise remove this line

systemctl disable firewalld
systemctl stop firewalld

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config
sed -i 's/Defaults requiretty/#Defaults requiretty/g' /etc/sudoers

yum -y update
```

Set up SSH access for the cephuser user to each node:

```shell
ssh-keygen
ssh-copy-id cephuser@osd1
```
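The original shows the key being copied to osd1 only. A sketch of repeating it for the remaining nodes, assuming the same `cephuser` account exists on each; the `NODES` list, the `copy_keys` function, and the `DRY_RUN` switch are illustrative names, not part of any tool:

```shell
# Nodes taken from the host list; adjust if the cluster differs.
NODES="mon1 mon2 mon3 osd1 osd2 osd3 osd4"

copy_keys() {
    for node in $NODES; do
        # With DRY_RUN set to a non-empty value, the command is printed instead of run.
        ${DRY_RUN:+echo} ssh-copy-id "cephuser@${node}"
    done
}

# DRY_RUN=1 copy_keys   # preview the commands
# copy_keys             # copy the key; prompts for each node's password
```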

On every node, edit the SSH client configuration:

```shell
vi ~/.ssh/config
```

and add the following entries:

```
Host osd1
    Hostname osd1
    User cephuser
Host osd2
    Hostname osd2
    User cephuser
Host osd3
    Hostname osd3
    User cephuser
Host osd4
    Hostname osd4
    User cephuser
Host mon1
    Hostname mon1
    User cephuser
Host mon2
    Hostname mon2
    User cephuser
Host mon3
    Hostname mon3
    User cephuser
```
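Because every stanza follows the same pattern, the file can also be generated rather than typed. A small sketch; `ssh_config_entries` is a hypothetical helper, and the node list simply mirrors the entries above:

```shell
# Print one Host stanza per node, matching the layout of the entries above.
ssh_config_entries() {
    for node in osd1 osd2 osd3 osd4 mon1 mon2 mon3; do
        printf 'Host %s\n    Hostname %s\n    User cephuser\n' "$node" "$node"
    done
}

# To append the stanzas to the config file:
# ssh_config_entries >> ~/.ssh/config
```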

Change the permissions:

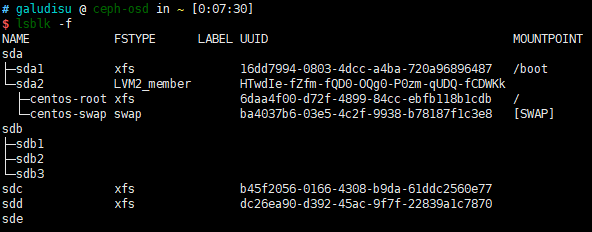

Prepare the disks on each OSD node:

```shell
parted -s /dev/sdc mklabel gpt mkpart primary xfs 0% 100%
mkfs.xfs /dev/sdc -f
parted -s /dev/sdd mklabel gpt mkpart primary xfs 0% 100%
mkfs.xfs /dev/sdd -f
parted -s /dev/sde mklabel gpt mkpart primary xfs 0% 100%
mkfs.xfs /dev/sde -f
parted -s /dev/sdb mklabel gpt mkpart primary 0% 33% mkpart primary 34% 66% mkpart primary 67% 100%
```

Log in to the admin node (the ceph node) as cephuser and create a dedicated directory:

```shell
mkdir ceph-deploy
cd ceph-deploy/
```

Install ceph-deploy and create the new cluster on the monitor nodes:

```shell
sudo rpm -Uhv http://download.ceph.com/rpm-jewel/el7/noarch/ceph-release-1-1.el7.noarch.rpm
sudo yum update -y && sudo yum install ceph-deploy -y
```

Initialize the configuration:

```shell
vi ceph.conf
```

and add the following settings:

```
public network = 10.10.51.0/24
cluster network = 10.10.110.0/24
# Choose reasonable numbers for number of replicas and placement groups.
osd pool default size = 2 # Write an object 2 times
osd pool default min size = 1 # Allow writing 1 copy in a degraded state
osd pool default pg num = 256
osd pool default pgp num = 256
# Choose a reasonable crush leaf type
# 0 for a 1-node cluster.
# 1 for a multi node cluster in a single rack
# 2 for a multi node, multi chassis cluster with multiple hosts in a chassis
# 3 for a multi node cluster with hosts across racks, etc.
osd crush chooseleaf type = 1
```
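The configuration asks for "reasonable numbers" of placement groups without saying how to pick them. One commonly cited rule of thumb is (number of OSDs × 100) / replica count, rounded up to the next power of two, as a total across all pools. The sketch below computes that; `suggest_pg_num` is a hypothetical helper, and the figure of 12 OSDs assumes three data disks on each of the four OSD nodes:

```shell
# Rule of thumb: (OSDs * target PGs per OSD) / replicas, rounded up to a power of two.
# Arguments: <osd count> <replica count> [target PGs per OSD, default 100]
suggest_pg_num() {
    osds=$1
    replicas=$2
    per_osd=${3:-100}
    raw=$(( osds * per_osd / replicas ))
    pg=1
    while [ "$pg" -lt "$raw" ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
}

suggest_pg_num 12 2   # prints 1024
```

The result is a cluster-wide budget, so a per-pool default such as the 256 used above can be reasonable when several pools share the cluster.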

Then install Ceph on every node:

```shell
ceph-deploy install ceph mon1 mon2 mon3 osd1 osd2 osd3 osd4
ceph-deploy mon create-initial
ceph-deploy gatherkeys mon1
```

Create the OSD disks on each OSD node:

```shell
ceph-deploy disk zap osd1:sdc osd1:sdd osd1:sde
ceph-deploy osd create osd1:sdc:/dev/sdb1 osd1:sdd:/dev/sdb2 osd1:sde:/dev/sdb3
```
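The commands above cover osd1 only. A sketch of the same two steps for all four OSD nodes, assuming each node has the identical disk layout (sdc, sdd, sde as data disks and sdb1 to sdb3 as journals); `create_osds` and `DRY_RUN` are illustrative names:

```shell
OSD_NODES="osd1 osd2 osd3 osd4"

create_osds() {
    for node in $OSD_NODES; do
        # With DRY_RUN set to a non-empty value, the commands are printed instead of run.
        ${DRY_RUN:+echo} ceph-deploy disk zap "${node}:sdc" "${node}:sdd" "${node}:sde"
        ${DRY_RUN:+echo} ceph-deploy osd create \
            "${node}:sdc:/dev/sdb1" "${node}:sdd:/dev/sdb2" "${node}:sde:/dev/sdb3"
    done
}

# DRY_RUN=1 create_osds   # preview; zapping destroys data, so check the list first
```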

After the cluster is created, the OSDs turn out not to be running. This is an issue in newer versions of ceph-deploy; the following commands resolve it:

```shell
sgdisk -t 1:45b0969e-9b03-4f30-b4c6-b4b80ceff106 /dev/sdb
sgdisk -t 2:45b0969e-9b03-4f30-b4c6-b4b80ceff106 /dev/sdb
sgdisk -t 3:45b0969e-9b03-4f30-b4c6-b4b80ceff106 /dev/sdb
```

Reboot every node, then enable the monitor target in systemd on each monitor:

```shell
systemctl enable ceph-mon.target
```

Finally, deploy the keys to all nodes:

```shell
ceph-deploy admin ceph mon1 mon2 mon3 osd1 osd2 osd3 osd4
sudo chmod +r /etc/ceph/ceph.client.admin.keyring
```

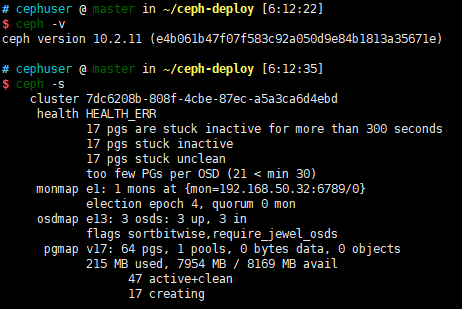

If everything is working, ceph -v and ceph -s will show: